PyCon APAC 2015, 2015-06-07

HDF5 Use Case

Liang Bo Wang (亮亮), 2015-06-07

By Liang2 under CC 4.0 BY license

Wrong place. The talk on click detection by Vpon or the one on sentiment analysis by Willy would suit better. Seriously.

Unexpected code fight and online judge

The .mat file format is based on HDF5 (MATLAB v7.3 and later)

import h5py

import numpy as np

f = h5py.File("weather.hdf5", "a")

f['/taipei_1/humidity'] = np.array(...)

f['/taipei_1/humidity'].attrs['rec_date'] = utc_timestamp

f['/taipei_1/temperature'] = np.array(...)

dataset = f['/taipei_5/humidity']

'''f['/taipei_1/these_days/humidity']
      ---------------------========
       group    group      dataset
'''

tpe_grp = f.create_group('taipei_1')

these_days = tpe_grp.create_group('these_days')

humidity = these_days.create_dataset('humidity', ...)
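The path layout above can be tried without touching the disk. This is a minimal sketch, assuming h5py's in-memory `core` driver; the file name and the humidity values are made up for illustration.

```python
import h5py
import numpy as np

# In-memory file (core driver, no backing store), so nothing is written to disk.
with h5py.File("groups_demo.hdf5", "w", driver="core", backing_store=False) as f:
    tpe_grp = f.create_group("taipei_1")
    these_days = tpe_grp.create_group("these_days")
    # Hypothetical readings, only here so the dataset has some content.
    humidity = these_days.create_dataset("humidity", data=np.array([0.71, 0.68, 0.75]))

    # A full path and step-by-step indexing reach the same dataset.
    via_path = f["/taipei_1/these_days/humidity"]
    via_steps = f["taipei_1"]["these_days"]["humidity"]

    full_name = humidity.name
    same_name = via_path.name == via_steps.name
    same_data = np.array_equal(via_path[...], via_steps[...])
    group_members = list(f["taipei_1"].keys())
```

Groups behave much like dictionaries here: `keys()` lists the members, and indexing with a path walks down the hierarchy.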

dset = f.create_dataset(
    "demo",
    (10, 10),          # dset.shape, can be multi-dim
    dtype=np.float64,  # dset.dtype like Numpy
    fillvalue=42       # default value
)

dset[:5, :5] = np.arange(25).reshape((5, -1))

out = dset[:5, :5]

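# More efficient: read straight into a preallocated array
# (here dset is assumed to have 100 rows and at least 50 columns)

out = np.empty((100, 50), dtype=np.float32)

dset.read_direct(out, np.s_[:, 0:50])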
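A small end-to-end sketch of the fill value, slicing, and `read_direct` together. It assumes an in-memory file and a 10 x 10 dataset so that the shapes line up; the file name is hypothetical.

```python
import h5py
import numpy as np

with h5py.File("slicing_demo.hdf5", "w", driver="core", backing_store=False) as f:
    dset = f.create_dataset("demo", (10, 10), dtype=np.float64, fillvalue=42)
    dset[:5, :5] = np.arange(25).reshape((5, -1))

    written = dset[:5, :5]    # slicing returns a new NumPy array
    untouched = dset[5:, 5:]  # never written, so it reads back as the fill value

    # read_direct fills an existing array instead of allocating a new one.
    out = np.empty((10, 5), dtype=np.float64)
    dset.read_direct(out, np.s_[:, 0:5])
```

`read_direct` pays off when the same buffer is reused across many reads; for a one-off read plain slicing is simpler.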

f.create_dataset(
    'classA_heart_beat',
    (100, 3600),  # 1 hr of heart rate records, one sample per second
    # 1 chunk = 3600 values * 1 byte (int8) = 3.6 KB
    chunks=(1, 3600), dtype=np.int8)
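The chunk size can be checked with plain arithmetic. A sketch using only NumPy: with `np.int8` each value takes one byte, so a `(1, 3600)` chunk holds 3600 bytes (8 * 3600 would be its size in bits).

```python
import numpy as np

dataset_shape = (100, 3600)  # 100 recordings, one sample per second for an hour
chunk_shape = (1, 3600)      # one whole recording per chunk
itemsize = np.dtype(np.int8).itemsize  # bytes per element

chunk_bytes = int(np.prod(chunk_shape)) * itemsize
# Number of chunks = product of ceil(dim / chunk_dim) over the axes.
n_chunks = int(np.prod([-(-d // c) for d, c in zip(dataset_shape, chunk_shape)]))
total_bytes = int(np.prod(dataset_shape)) * itemsize
```

Reading one recording then touches exactly one chunk, which is the point of choosing this chunk shape.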

create_dataset(.., compression="gzip", shuffle=True)
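A hedged sketch of the compression options in use. The data is invented (slowly varying integers), and the file lives in memory; only `compression`, `shuffle`, and `chunks` come from the slides.

```python
import h5py
import numpy as np

# Hypothetical data: slowly varying integers, the kind that tends to compress well.
data = np.tile(np.arange(3600, dtype=np.int32) // 60, (100, 1))

with h5py.File("compress_demo.hdf5", "w", driver="core", backing_store=False) as f:
    dset = f.create_dataset(
        "heart_beat_gz",
        data=data,
        chunks=(1, 3600),    # compression works chunk by chunk
        compression="gzip",
        shuffle=True,        # byte-shuffle filter applied before gzip
    )
    filters = (dset.compression, dset.shuffle)
    # Compression is transparent: reads return the original values.
    round_trip_ok = np.array_equal(dset[...], data)
```

Both filters are lossless, so the only trade-off is CPU time against file size.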

dset = f.create_dataset(...)

dset.attrs['title'] = 'PyCon APAC'

dset.attrs['year'] = 2015

>>> dt = h5py.special_dtype(vlen=str)

>>> dt

dtype(('|O4', [(({'type': <type 'str'>}, 'vlen'), '|O4')]))
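A short sketch of storing variable-length strings with this dtype. The dataset name and strings are made up, and the decode step is an assumption to cope with h5py versions that return `bytes` instead of `str` on read.

```python
import h5py

dt = h5py.special_dtype(vlen=str)
base_type = h5py.check_dtype(vlen=dt)  # recovers the Python type behind the dtype

with h5py.File("strings_demo.hdf5", "w", driver="core", backing_store=False) as f:
    names = f.create_dataset("cities", (3,), dtype=dt)
    names[0] = "taipei"
    names[1] = "a considerably longer string"
    names[2] = ""
    raw = list(names[...])

# Depending on the h5py version, elements come back as str or as bytes.
decoded = [s.decode("utf-8") if isinstance(s, bytes) else s for s in raw]
```

Each element keeps its own length, so no padding to a fixed width is needed.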

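import pickle

class MyConfig:
    def __init__(self, x, y):  # further parameters elided on the slide
        self.x = x
        self.y = y
        # ...

c = MyConfig(x=1, y=2)  # placeholder values
with open(pickle_pth, 'wb') as fh:
    pickle.dump(c, fh)  # dump needs an open binary file
with open(pickle_pth, 'rb') as fh:
    c = pickle.load(fh)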

.npy is a simple binary format (a header plus the raw array bytes); .npz is basically a ZIP archive of .npy files.

>>> np.save('/tmp/123', np.array([[1, 2, 3], [4, 5, 6]]))

>>> np.load('/tmp/123.npy')

array([[1, 2, 3],
       [4, 5, 6]])
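For several arrays, `np.savez` writes an `.npz`, which is a ZIP archive with one `.npy` member per array. A minimal sketch that checks this with the standard `zipfile` module, using an in-memory buffer instead of a file on disk:

```python
import io
import zipfile

import numpy as np

a = np.array([[1, 2, 3], [4, 5, 6]])
b = np.arange(4.0)

buf = io.BytesIO()
np.savez(buf, a=a, b=b)  # keyword names become member names

buf.seek(0)
is_zip = zipfile.is_zipfile(buf)

buf.seek(0)
with zipfile.ZipFile(buf) as zf:
    members = sorted(zf.namelist())

buf.seek(0)
with np.load(buf) as archive:
    a_back = archive["a"]
```

Because it is an ordinary ZIP file, any unzip tool can list or extract the members.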

Some optimization comparisons are on their official site

More on this later. Simply put, it reduces cross-platform portability

df.to_hdf(...) with PyTables

Something like this

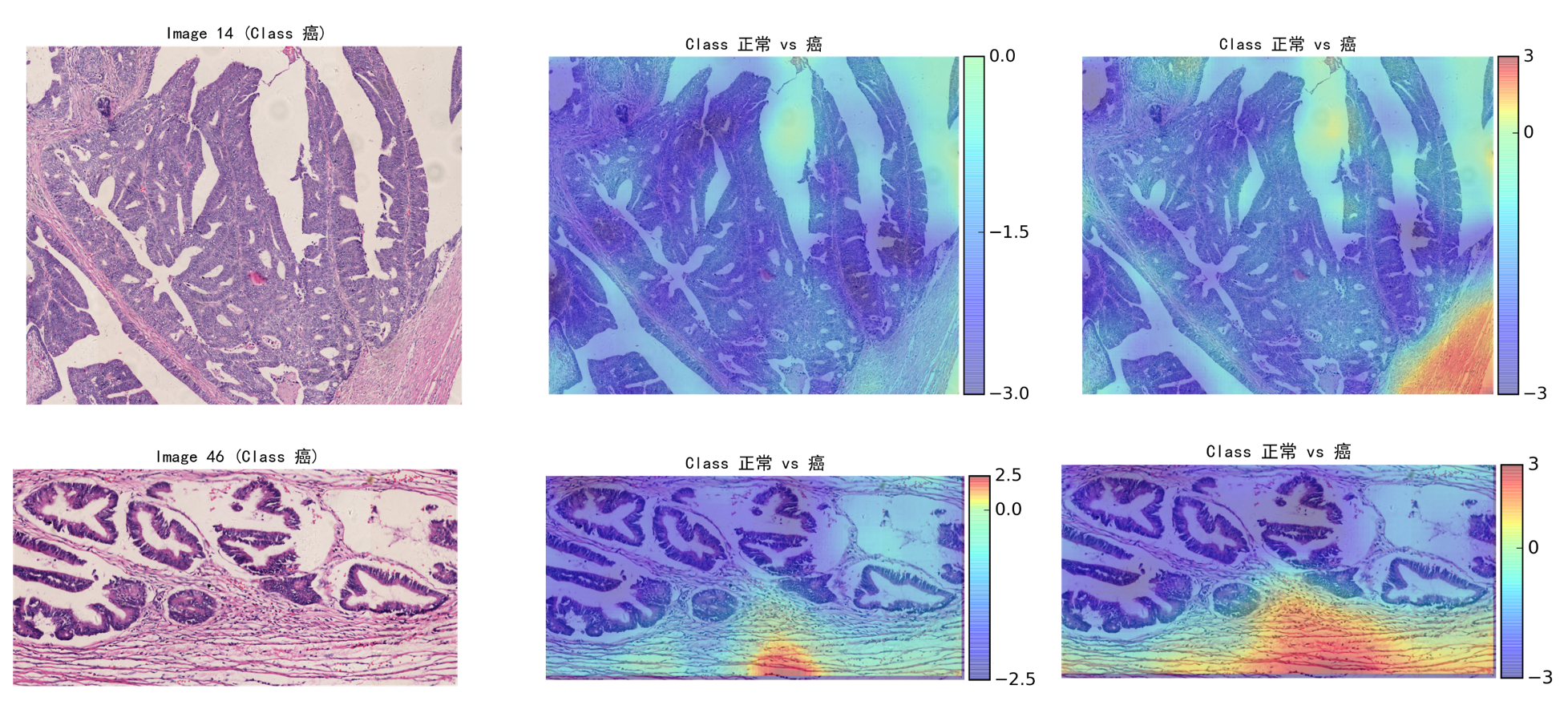

with h5py.File(hdf5_pth, 'r+') as f:  # 'r+' keeps existing data; 'w' would truncate the file
    for batch in img_batches:
        region_data = f['raw_img_xx'][batch.region]
        # ...
        f['heatmap_img_xx'][batch.region] = outcome
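The batch loop can be sketched in a self-contained form. Everything here is a stand-in: `iter_regions` is a hypothetical helper that tiles the image, the 8 x 8 array plays the raw image, and doubling the values replaces the real computation.

```python
import h5py
import numpy as np

def iter_regions(height, width, tile):
    """Yield 2-D slice tuples that tile a (height, width) image."""
    for r in range(0, height, tile):
        for c in range(0, width, tile):
            yield np.s_[r:min(r + tile, height), c:min(c + tile, width)]

with h5py.File("tiles_demo.hdf5", "w", driver="core", backing_store=False) as f:
    # Hypothetical stand-ins for the raw image and the output heatmap.
    raw = f.create_dataset("raw_img", data=np.arange(64, dtype=np.float32).reshape(8, 8))
    heat = f.create_dataset("heatmap_img", (8, 8), dtype=np.float32, chunks=(4, 4))

    for region in iter_regions(8, 8, tile=4):
        region_data = raw[region]         # only this tile is read
        heat[region] = region_data * 2.0  # placeholder for the real computation

    result = heat[...]
```

Only one tile is held in memory at a time, which is what makes this pattern usable for images larger than RAM.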

Input viewed as 32 width x 32 height x 3 channels (depth)

Output viewed as 1 x 1 x 10. Spatial information is preserved.

# evaluate loss and gradient, internally use self.W

loss, grad = self.loss(X_batch, y_batch, reg)

loss_history.append(loss)

# perform parameter update

self.W -= grad * learning_rate
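To make the update loop concrete, here is a minimal sketch wrapped in a toy class. The model (linear regression with a squared-error loss), the class name `LinearRegressor`, and all hyperparameters are assumptions for illustration, not the classifier from the course notes.

```python
import numpy as np

class LinearRegressor:
    def __init__(self, n_features):
        self.W = np.zeros(n_features)

    def loss(self, X_batch, y_batch, reg):
        """Mean squared error plus an L2 penalty, and the gradient w.r.t. self.W."""
        residual = X_batch @ self.W - y_batch
        loss = 0.5 * np.mean(residual ** 2) + 0.5 * reg * np.sum(self.W ** 2)
        grad = X_batch.T @ residual / len(y_batch) + reg * self.W
        return loss, grad

    def train(self, X, y, learning_rate=0.1, reg=0.0, num_iters=500,
              batch_size=32, seed=0):
        rng = np.random.default_rng(seed)
        loss_history = []
        for _ in range(num_iters):
            # sample a minibatch
            idx = rng.choice(len(y), size=batch_size, replace=False)
            X_batch, y_batch = X[idx], y[idx]
            # evaluate loss and gradient, internally use self.W
            loss, grad = self.loss(X_batch, y_batch, reg)
            loss_history.append(loss)
            # perform parameter update
            self.W -= grad * learning_rate
        return loss_history

# Synthetic, noise-free data so the true weights are recoverable.
rng = np.random.default_rng(1)
X = rng.standard_normal((256, 3))
true_W = np.array([1.0, -2.0, 0.5])
y = X @ true_W

model = LinearRegressor(3)
history = model.train(X, y)
```

Plotting `history` gives the usual loss curve; on this toy problem it falls toward zero as `model.W` approaches `true_W`.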

Animations that may help your intuitions about the learning process dynamics. Left: Contours of a loss surface and time evolution of different optimization algorithms. Notice the "overshooting" behavior of momentum-based methods, which makes the optimization look like a ball rolling down a hill. Right: A visualization of a saddle point in the optimization landscape, where the curvature along different dimensions has different signs (one dimension curves up and another down). Notice that SGD has a very hard time breaking symmetry and gets stuck on the top. Conversely, algorithms such as RMSprop will see very low gradients in the saddle direction. Due to the denominator term in the RMSprop update, this will increase the effective learning rate along this direction, helping RMSprop proceed.

Ref: CS231n notes, Neural Networks Part 3. Image credit: Alec Radford.